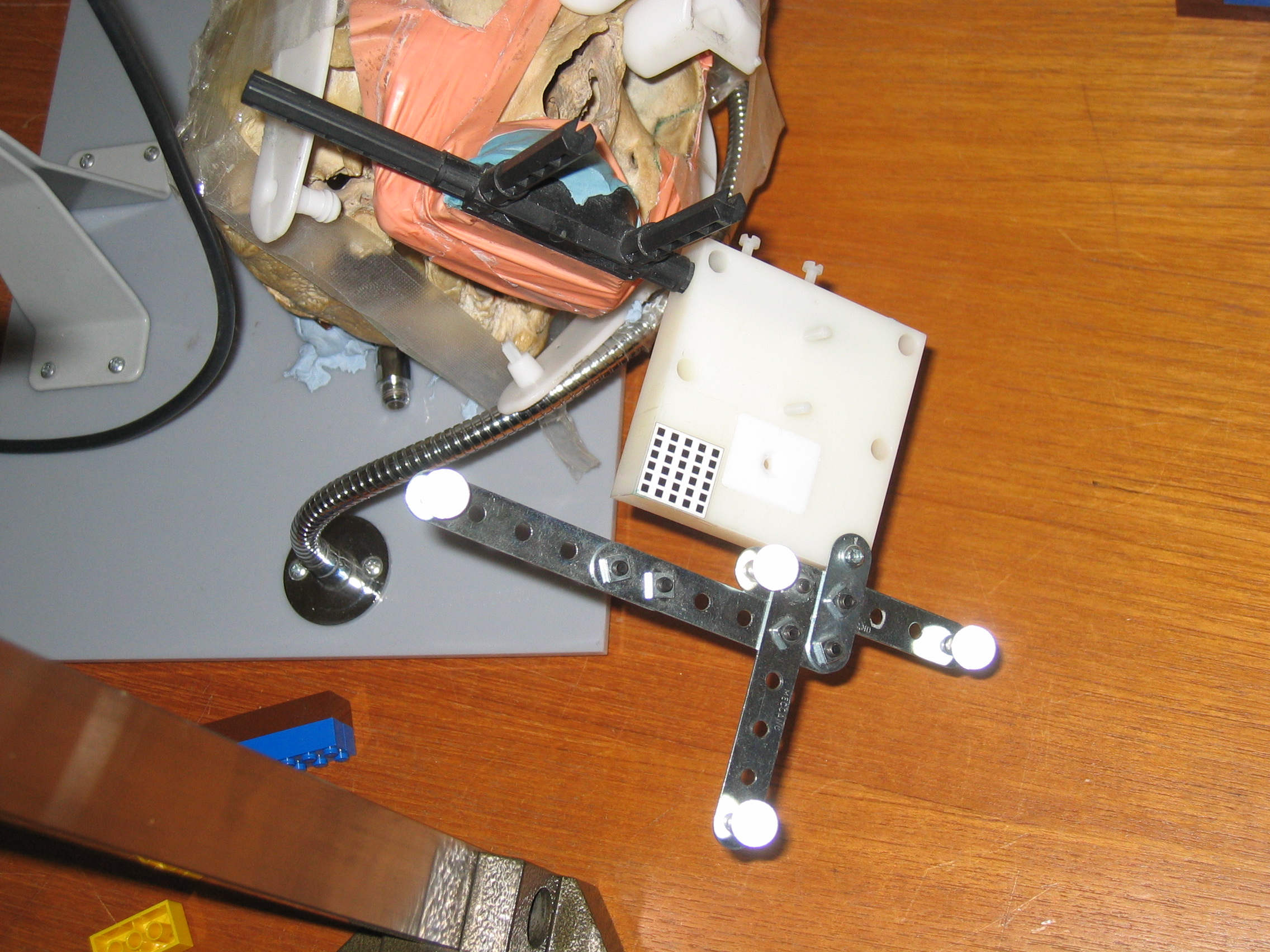

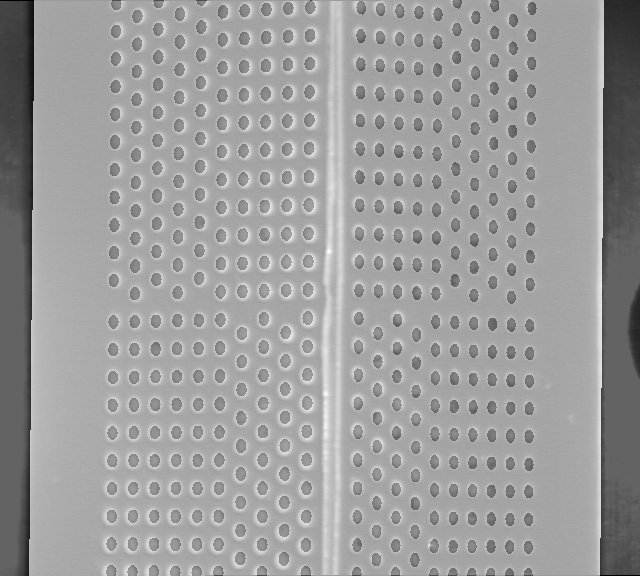

Augmented Reality (AR) applications typically involve a real space and a virtual space that must be co-registered. Each space has an 'observer', usually in the form of a camera system. In the real space the 'camera' is typically the optical system of the surgical microscope or the optical element of an endoscope. The virtual space is a computer graphics space - e.g. implemented in C++ with OpenGL - with a 'virtual' camera. The calibration step aims to align the real camera with the virtual camera. This alignment requires knowledge of six extrinsic and five intrinsic parameters. The extrinsic parameters are the six spatial degrees of freedom (DOFs): three for position (translational DOFs) and three for orientation (rotational DOFs). The intrinsic parameters are the effective focal length, the optical center (two parameters, x and y), the scale factor and the first-order radial distortion coefficient (second-order radial distortion can be ignored in most practical cases).

Several calibration algorithms have been designed for this purpose. We used a modified version of the well-established Tsai calibration algorithm. To perform calibration, a mouthpiece (VBH - Vogele Bale Hohner) carrying a calibration pattern is inserted in the patient's mouth (see left image below). A virtual counterpart of this pattern exists in the virtual space. A snapshot of a phantom with the calibration device mounted (flat pattern) is shown in the middle image below. The dot pattern is segmented, and the image coordinates of the dots in the real image are matched with their real-world coordinates. This is sufficient to derive the eleven parameters and hence to define the pose of the camera. Calibration errors are at the sub-pixel level, provided the object is inclined at an angle of at least 45 degrees. The right image below shows an overlay of the virtual and real calibration objects (roof-shaped).
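To make the eleven parameters concrete, the following is a minimal sketch of a Tsai-style camera model that maps a world point to pixel coordinates. It is illustrative only and not the modified algorithm used here: the names `CameraParams`, `rotation_matrix` and `project`, the Z-Y-X rotation angle convention, the sensor element sizes `dx` and `dy`, and the fixed-point inversion of the distortion equation are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraParams:
    # Six extrinsic parameters: rotation angles (radians) and translation.
    rx: float
    ry: float
    rz: float
    tx: float
    ty: float
    tz: float
    # Five intrinsic parameters.
    f: float       # effective focal length
    cx: float      # optical center, x (pixels)
    cy: float      # optical center, y (pixels)
    sx: float      # horizontal scale factor
    kappa1: float  # first-order radial distortion coefficient


def rotation_matrix(rx, ry, rz):
    """Return R = Rz(rz) * Ry(ry) * Rx(rx); the angle order is an assumption."""
    ca, sa = math.cos(rx), math.sin(rx)
    cb, sb = math.cos(ry), math.sin(ry)
    cg, sg = math.cos(rz), math.sin(rz)
    return [
        [cg * cb, cg * sb * sa - sg * ca, cg * sb * ca + sg * sa],
        [sg * cb, sg * sb * sa + cg * ca, sg * sb * ca - cg * sa],
        [-sb, cb * sa, cb * ca],
    ]


def project(p, world, dx=1.0, dy=1.0, iterations=20):
    """Project a world point (X, Y, Z) to pixel coordinates (u, v)."""
    R = rotation_matrix(p.rx, p.ry, p.rz)
    X, Y, Z = world
    # World -> camera coordinates (extrinsic parameters).
    xc = R[0][0] * X + R[0][1] * Y + R[0][2] * Z + p.tx
    yc = R[1][0] * X + R[1][1] * Y + R[1][2] * Z + p.ty
    zc = R[2][0] * X + R[2][1] * Y + R[2][2] * Z + p.tz
    # Ideal pinhole projection onto the image plane (zc must be non-zero).
    xu = p.f * xc / zc
    yu = p.f * yc / zc
    # Radial distortion: xu = xd * (1 + kappa1 * r^2), with r^2 = xd^2 + yd^2.
    # Solve for the distorted coordinates by fixed-point iteration.
    xd, yd = xu, yu
    for _ in range(iterations):
        r2 = xd * xd + yd * yd
        xd = xu / (1.0 + p.kappa1 * r2)
        yd = yu / (1.0 + p.kappa1 * r2)
    # Image plane -> pixel coordinates.
    u = p.sx * xd / dx + p.cx
    v = yd / dy + p.cy
    return u, v
```

Calibration runs this model in reverse: given the matched image and world coordinates of the pattern dots, it estimates the parameter values for which `project` best reproduces the observed dot positions. The virtual camera can then be configured with the same values.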
